Too many servers, too much equipment, too many logs to check. Better to consolidate them all in one location, right? Splunk would be my first choice, but it is quite expensive and the free tier's 500 MB per day is a joke. So I stumbled across Graylog. Graylog Open is free and you can install it either in a Docker container or on a Linux server. Docker is not my strong point so…

Prerequisites

- MongoDB 5.x or 6.x

- Elasticsearch 7.10.2 (you can use OpenSearch but I prefer the former)

- And of course an Ubuntu server 22.04 fully updated with enough space for your logs 🙂

MongoDB

The official Graylog documentation mentions MongoDB 5.x. I am not settling for less than the latest one, so let’s install 6.x.

Log in to your new server and get root privileges

sudo su

Import the MongoDB repository public key

curl -fsSL https://www.mongodb.org/static/pgp/server-6.0.asc | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/mongodb-6.gpg

Create a repository list for MongoDB

nano /etc/apt/sources.list.d/mongodb-org-6.0.list

Add the repository

deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu focal/mongodb-org/6.0 multiverse

Save and exit
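
If you would rather skip the editor, the same file can be written in one go. This is only a sketch: `write_repo_list` is a helper name I made up for illustration, and the target path is an argument so you can try it on a scratch file first.

```shell
# Hypothetical helper (not part of apt or MongoDB): write one apt source
# line to a list file, replacing whatever the file contained before.
# $1 = repository line, $2 = target file
write_repo_list() {
    printf '%s\n' "$1" > "$2"
}

# Usage (as root), with the same line and path as in the steps above:
# write_repo_list "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu focal/mongodb-org/6.0 multiverse" \
#     /etc/apt/sources.list.d/mongodb-org-6.0.list
```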

Install the prerequisites. The focal build of MongoDB depends on libssl1.1, which Ubuntu 22.04 no longer ships

wget http://archive.ubuntu.com/ubuntu/pool/main/o/openssl/libssl1.1_1.1.1f-1ubuntu2.16_amd64.deb

dpkg -i ./libssl1.1_1.1.1f-1ubuntu2.16_amd64.deb

Update the indexes and install the latest stable version

apt update

apt install -y mongodb-org

Enable MongoDB and verify it is running

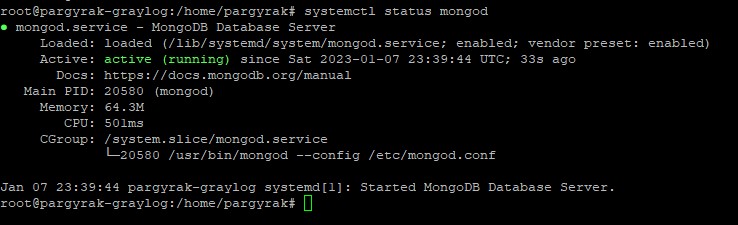

systemctl daemon-reload

systemctl enable mongod

systemctl restart mongod

systemctl status mongod

Active and running means you are all set

Elasticsearch

Get the repository key

curl -fsSL https://artifacts.elastic.co/GPG-KEY-elasticsearch | gpg --dearmor -o /usr/share/keyrings/elastic.gpg

Create a repository list for Elasticsearch

nano /etc/apt/sources.list.d/elastic-7.x.list

Add the repository. Be careful: at the time of this writing, the official Graylog documentation has an error here

deb [signed-by=/usr/share/keyrings/elastic.gpg] https://artifacts.elastic.co/packages/oss-7.x/apt stable main

Update the indexes and install the latest stable open source version

apt update

apt install elasticsearch-oss

Edit the configuration file

nano /etc/elasticsearch/elasticsearch.yml

Under the Cluster section, insert the following. The first line already exists but is commented out

cluster.name: graylog

action.auto_create_index: false

Enable Elasticsearch and verify it is running

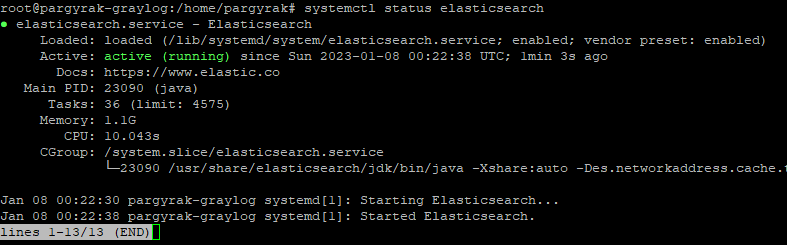

systemctl enable elasticsearch

systemctl restart elasticsearch

systemctl status elasticsearch

Active and running means you are good to go
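
Systemd only tells you the process is up. To see whether Elasticsearch actually answers and picked up the new cluster name, you can ask its `_cat/health` API. A rough sketch, assuming the default listener on 127.0.0.1:9200 without authentication; `es_cluster_ok` is a name I made up for illustration.

```shell
# Hypothetical helper: succeed only if Elasticsearch answers and reports
# the expected cluster name. Assumes the default 127.0.0.1:9200 listener.
# $1 = expected cluster name (defaults to "graylog")
es_cluster_ok() {
    expected=${1:-graylog}
    # h=cluster,status limits the output to "<cluster name> <status>"
    out=$(curl -s 'http://127.0.0.1:9200/_cat/health?h=cluster,status') || return 1
    echo "$out"
    # Compare the first column with the name we configured
    [ "$(printf '%s\n' "$out" | awk '{print $1}')" = "$expected" ]
}

# Usage:
# es_cluster_ok graylog && echo "Elasticsearch is up with the right cluster name"
```

If the name printed is still the default one, the cluster.name line in elasticsearch.yml is probably still commented out.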

Graylog

Finally, now that the prerequisites are out of the way, time to install Graylog itself

Get the latest repository and keys and install

wget https://packages.graylog2.org/repo/packages/graylog-5.0-repository_latest.deb

dpkg -i graylog-5.0-repository_latest.deb

apt update

apt install graylog-server

Generate a secret to secure the user passwords. You will need to install pwgen

apt install pwgen

pwgen -N 1 -s 96

Make a note of this as we are going to use it for the password_secret variable
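
If you do not feel like installing pwgen just for this, a random secret can also come straight from /dev/urandom. A minimal sketch, not what the Graylog docs suggest: `gen_secret` is a made-up name, and it prints hex characters rather than the mixed alphanumerics pwgen gives you.

```shell
# Hypothetical helper: print a random hex string read from /dev/urandom.
# $1 = number of random bytes (defaults to 48, which yields 96 hex characters,
#      the same length as the pwgen call above)
gen_secret() {
    bytes=${1:-48}
    # od prints the bytes as two-digit hex values; tr strips spaces and newlines
    head -c "$bytes" /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}

# Usage:
# gen_secret
```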

Create your admin password hash. Do not forget your password!

echo -n "Enter Password: " && head -1 </dev/stdin | tr -d '\n' | sha256sum | cut -d" " -f1

Again, make a note as you are going to need it for the root_password_sha2 variable
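
The one-liner above shows the password on screen as you type it. Here is a small sketch of the same hashing step wrapped in a function, so it can be fed from a pipe or combined with `stty -echo` to hide the input. The name `hash_password` is my own and purely illustrative.

```shell
# Hypothetical helper: read one line from stdin and print its SHA-256 hex
# digest. The trailing newline is stripped so it is not part of the hash.
hash_password() {
    head -n 1 | tr -d '\n' | sha256sum | cut -d' ' -f1
}

# Usage with hidden input (stty -echo turns terminal echo off):
# printf 'Enter Password: '
# stty -echo; hash=$(hash_password); stty echo
# echo; echo "$hash"
```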

Edit the configuration file

nano /etc/graylog/server/server.conf

Add the secret and the password hash to the variables mentioned above, and bind the IP of the server interface you want

password_secret = <generated secret>

root_password_sha2 = <admin password hash>

http_bind_address = xxx.yyy.zzz.xyz:9000

Save and exit
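
The same three settings can be applied without opening an editor, which is handy if you script the install. The helper below is a sketch under a few assumptions: `set_conf` is a name I invented, it relies on GNU sed (as shipped with Ubuntu), and it expects values without `|`, `&` or `\` characters, which holds for a hex hash, an alphanumeric secret, and an ip:port pair.

```shell
# Hypothetical helper: set "key = value" in a properties-style config file.
# Replaces the first existing line for that key (commented out or not),
# or appends the setting if the key is not present at all.
# Assumes GNU sed (for the 0,/re/ address).
# $1 = key, $2 = value, $3 = config file
set_conf() {
    key=$1 value=$2 file=$3
    pattern="^#?[[:space:]]*${key}[[:space:]]*="
    if grep -Eq "$pattern" "$file"; then
        # 0,/re/ limits the substitution to the first matching line
        sed -i -E "0,/${pattern}/ s|${pattern}.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Usage (as root); the values are placeholders for your own secret, hash and IP:
# set_conf password_secret    "<generated secret>"   /etc/graylog/server/server.conf
# set_conf root_password_sha2 "<admin password hash>" /etc/graylog/server/server.conf
# set_conf http_bind_address  "xxx.yyy.zzz.xyz:9000"  /etc/graylog/server/server.conf
```

Take a copy of server.conf before letting any script touch it.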

Start and enable the Graylog server, then verify it is running

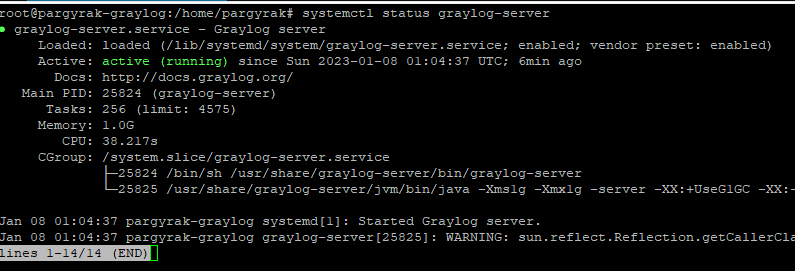

systemctl start graylog-server

systemctl enable graylog-server

systemctl status graylog-server
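
With three services in play, it can be quicker to check them all at once than to read three status screens. A small sketch using `systemctl is-active`; the function name `check_services` is just something I picked.

```shell
# Hypothetical helper: report whether each given systemd unit is active.
# Returns non-zero if at least one of them is not.
check_services() {
    rc=0
    for svc in "$@"; do
        if systemctl is-active --quiet "$svc"; then
            echo "$svc: active"
        else
            echo "$svc: NOT active"
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
# check_services mongod elasticsearch graylog-server
```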

Congrats! It is working. But can you access it? Nope, you need to open the port on your firewall

ufw allow 9000/tcp

Now you can! The username is admin and the password is the one you set.
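
If the page does not load, it helps to know whether the problem is the firewall or Graylog itself. Graylog has a load balancer status endpoint that should answer ALIVE once the node is up, so a quick check from another machine could look like the sketch below. `graylog_alive` is a made-up name, and the address is whatever you put in http_bind_address.

```shell
# Hypothetical helper: succeed if the Graylog node answers ALIVE on its
# load balancer status endpoint.
# $1 = host:port, as set in http_bind_address
graylog_alive() {
    status=$(curl -s --max-time 5 "http://$1/api/system/lbstatus") || return 1
    [ "$status" = "ALIVE" ]
}

# Usage:
# graylog_alive xxx.yyy.zzz.xyz:9000 && echo "Graylog is reachable"
```

Keep in mind that Graylog can take a little while to finish starting, so a failure right after the service starts does not have to mean anything is wrong.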